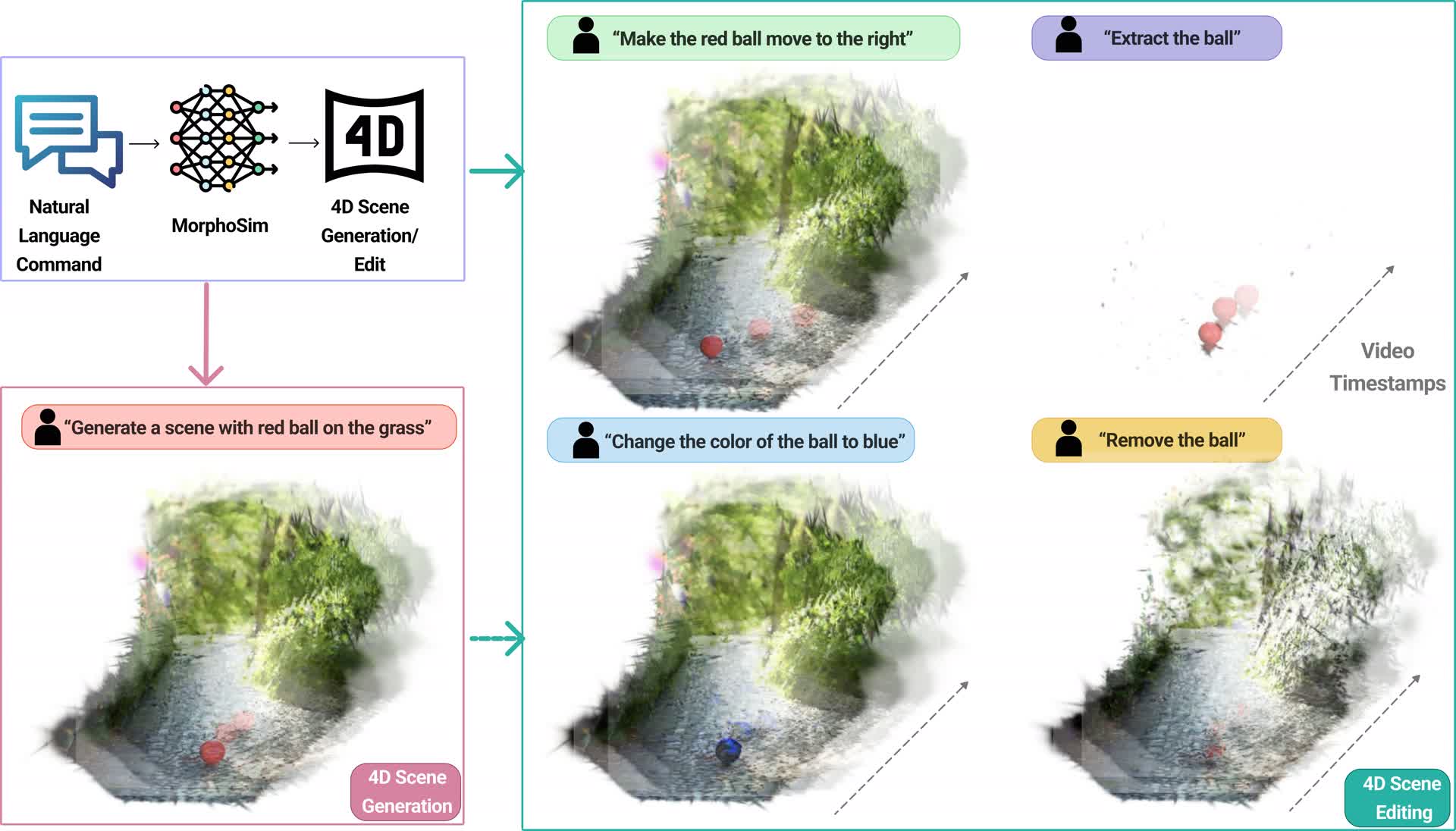

Figure 1: MorphoSim supports interactive and controllable generation and editing.

Overview of MorphoSim

Figure 2: Overview of the MorphoSim pipeline with Command Parameterizer, Scene Generation, and Scene Editing modules.

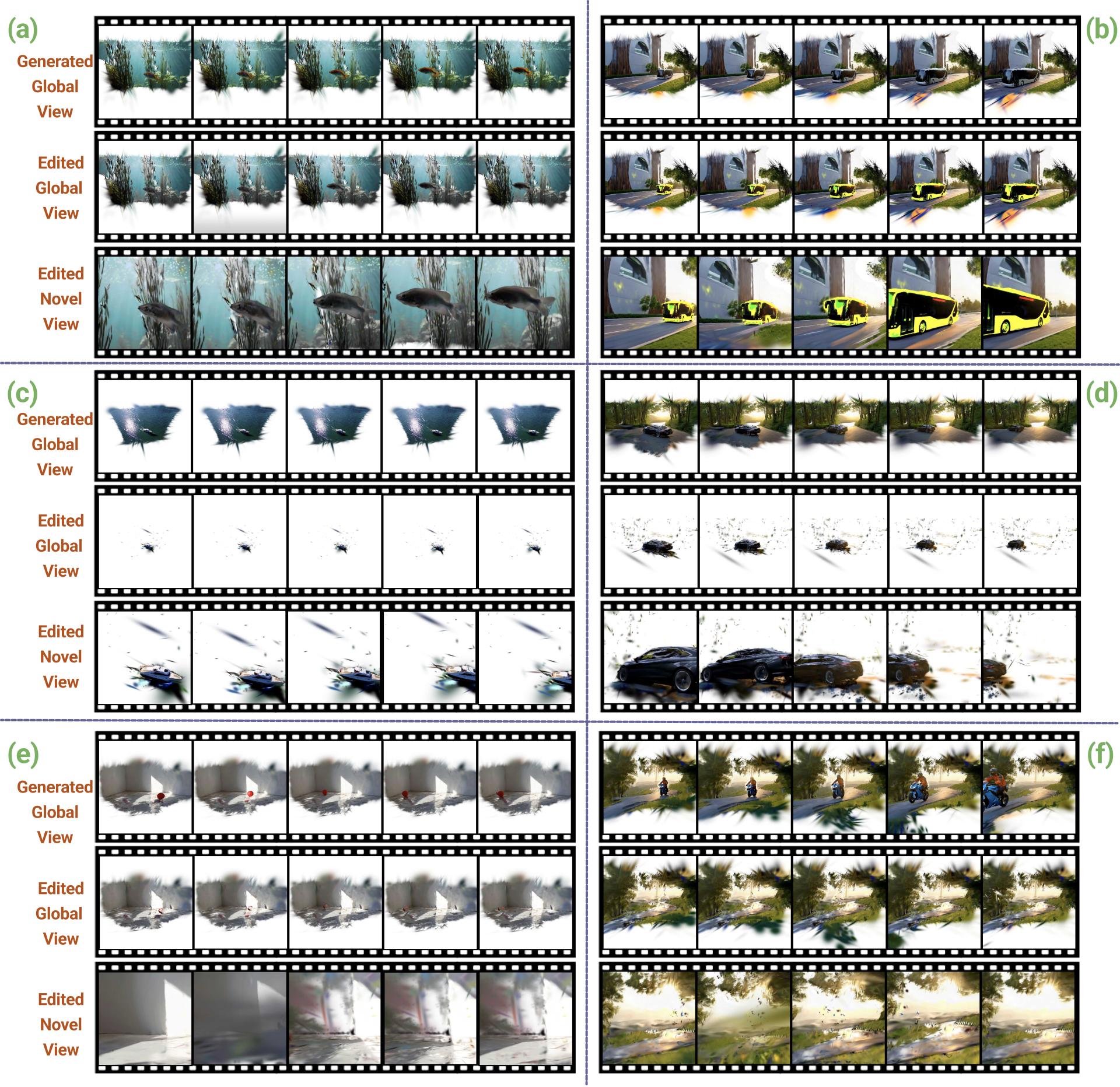

Examples

Figure 3: MorphoSim supports color editing, object extraction, and object removal.

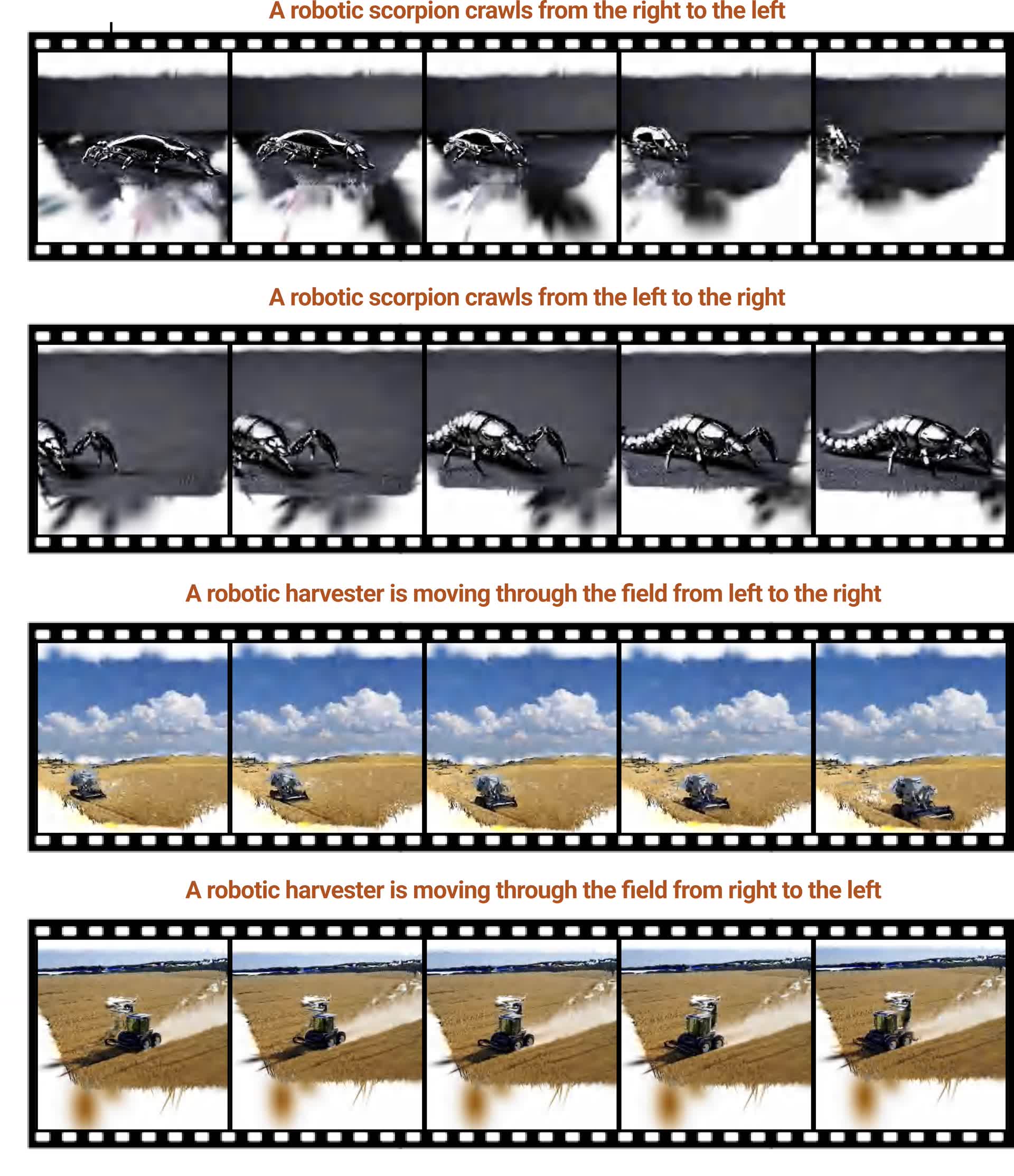

Figure 4: MorphoSim supports motion control during generation.

BibTeX

@article{he2025morphosim,
  title = {MorphoSim: An Interactive, Controllable, and Editable Language-guided 4D World Simulator},
  author = {He, Xuehai and Zhou, Shijie and Venkateswaran, Thivyanth and Zheng, Kaizhi and Wan, Ziyu and Kadambi, Achuta and Wang, Xin Eric},
  year = {2025},
  journal = {arXiv preprint arXiv:2510.04390}
}